Remember the last spring-clean you gave your CMS? If you can’t, you’re not alone. Most corporate sites look like attics packed with unlabelled boxes: PDF whitepapers nobody opens, blog posts living in three different taxonomies, press releases from the pre-GDPR era. Human visitors already find that annoying; for large language models it’s junk food with zero nutrition. Google now states openly that it relies on structured data to slot your content into its Knowledge Graph before it can cite you at all. Analysts have shown that properly marked-up pages can cut hallucination rates for generative answers in half. If we keep treating our digital assets as loose piles of pixels, chatbots will either overlook our meticulously crafted know-how or, worse, stitch it together incorrectly. The answer is not more content but better scaffolding. Without clean sitemaps, solid canonicals, tidy slugs and healthy JSON-LD blocks even the sharpest insight turns into a zombie in the index. So before the next campaign launches, audit the material you already have and anchor it so firmly in your information architecture that both people and machines know exactly which shelf to reach for. That way your expertise becomes a citable node in tomorrow’s knowledge network rather than digital dust in some semantic matrix.

Structure Beats Volume: Knowledge Graphs as Vitamin Boosters for LLMs

Language is messy; machines crave order. That is why more and more organisations weave large language models together with internal knowledge graphs or specialised small language models. Studies reveal that systems such as Gemini enrich their answers explicitly with structured schemas. Publishing product pages without Product schema or job ads without JobPosting markup is like feeding the models empty calories. For enterprise CMOs the takeaway is clear: exports from PIM, CRM or DAM systems must be not only complete but also semantically precise and machine-readable. In WordPress this is already possible via WPGraphQL, ACF-to-REST or Yoast, which inject raw data as JSON-LD and make it immediately available on headless endpoints. The result is a data layer that agents can query through retrieval-augmented generation instead of rummaging through free-text. The clearer you label each entity, product ID, author profile, location code, the less prompt gymnastics you need later because the model already knows where the truth lives. A happy side effect: consistently defined entities reduce internal duplicates and simplify reporting to the boardroom.

SEO in the Agentic Age: From Rankings to Recall Signals

Deloitte expects that by 2025 one quarter of Gen-AI teams will be running agentic pilots and that by 2027 one company in two will rely on autonomous workflows. Once such agents start making purchase decisions on their own, they will depend on data frameworks, not eye-catching landing pages. Classic SEO metrics like page speed and internal linking stay vital because they help machines quantify trust. The big shift is that a top-three organic spot used to guarantee visibility, whereas today only clean schema earns you a berth in a model’s long-term memory. Visibility turns into memorability. For content teams this means every paragraph now needs a semantic twin and every image a descriptive entity tag. Keywords give way to consistency rules: same product, same SKU, same price across every channel. Ignore that discipline and an autonomous procurement bot may buy from your competitor simply because their dataset is cleaner.

From Content Chaos to Scalable Data Layer: The Five-Step Roadmap

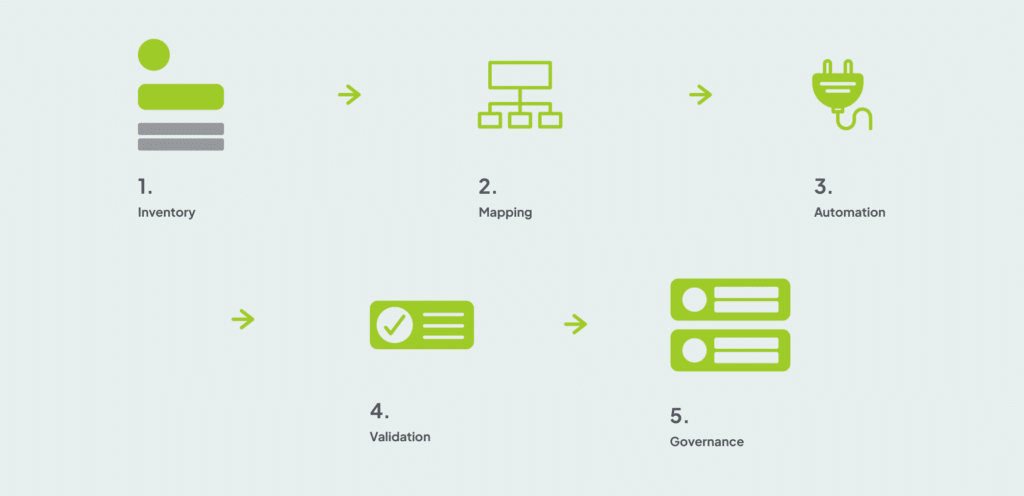

How do you pull off the metamorphosis?

- Step one: inventory. Compile a complete list of content sources—WordPress posts, PDF archives, media libraries, product tables.

- Step two: mapping. Assign each source an appropriate schema vocabulary; Google even recommends custom PageMaps when standard types fall short.

- Step three: automation. Deploy middleware or WordPress plugins that inject schema on every publish event.

- Step four: validation. Use the Rich Result Test not just for technical errors but for logic gaps and mismatches between frontend and source systems.

- Step five: governance. Give every data domain an owner and schedule semi-annual audits so the shiny new layer does not revert to a graveyard.

Industry experts remind us that structured data is not a direct ranking factor, yet generative models lose precision fast without it. In practice I see budgets shifting toward semantic pipelines, GraphQL gateways and schema monitoring, because that is where real impact lives. Companies that invest now build an information supply chain that pleases both screen readers and agentic AI.

KPI Radar and Governance: Proving the Value of Your Data Refactor

A data layer is no vanity project. Leadership teams want numbers. The first metric is coverage score, the percentage of pages carrying valid schema. Second is retrieval precision: how often do external or internal models actually surface your entities during prompt testing? Third is hallucination drop, measured by regular QA prompts you run against ChatGPT or in-house LLMs; a fifteen-percent decline over three quarters shows your structure is landing. Fourth is conversion assistance: the share of transactions initiated through chat or voice widgets that rely on your data layer. Fifth is content velocity, the reduction in production time per page release once templates auto-inject schema. Frame these metrics in your OKR cycle and report quarterly. You will not only ship better content but also prove that machines are finding it, which speaks to even the most sceptical CFO ear.

Outlook for WordPress Agencies: From Page Builder to Data Architect

Anyone still thinking a WordPress agency’s job is to stack pretty themes and tweak some plugins is missing the new weight class. In the agentic era we shift from page builders to data architects, operating at board level. Our mission is no longer to drop sliders on a home page but to make sure every entity on an enterprise site feeds a robust knowledge graph: product SKU, author profile, location code and even temporary campaign discounts. That requires deep expertise in WPGraphQL, ACF schemas and deployment pipelines, along with solid chops in data governance and compliance. After all, what good is the cleanest JSON-LD if a price change in the ERP never finds its way online? This is precisely where a modern WordPress agency shines: we bind CMS, PIM and commerce back ends into a single data backbone that remains intuitive for humans yet serves as a rock-solid source for every agentic system. Do it right and you gain not just stronger SEO signals and lower hallucination rates but also a tangible competitive edge. While rivals slap prompt band-aids on shaky content, our clients are already exposing APIs that LLMs trust implicitly. In short, the WordPress agency that thinks in data layers graduates from vendor of web pages to integration partner orchestrating enterprise value creation and AI ecosystems in the same breath.

Ready to gain the competitive edge with Syde?

Related articles

-

Why Choosing the Right CMS Can Make or Break Your Website

When it comes to building a website that actually works for your business, the CMS isn’t just another tool, it’s the backbone of the entire operation.

-

Rethinking WordPress Multisite: Simpler, Smarter, More User-Friendly

When WordPress 3.0 was released back in 2010, named Thelonious after jazz pianist Thelonious Monk, it introduced a feature that fascinated me immediately: Multisite.